Autoregressive Integrated Moving Average (ARIMA) models are widely used to forecast time series data and often produce accurate forecasts. They also support dynamic forecasting, in which the model's own earlier forecasts are used to predict future values of a time series variable. Most statistical software packages can estimate ARIMA models.

An ARIMA model requires the correct specification of the number of autoregressive terms (p), the order of integration (d) and the number of moving average terms (q), and is written as ARIMA (p, d, q). In practice, however, we rarely know p, d or q in advance. Here, we discuss a procedure for choosing these three values. Finally, we estimate an ARIMA (p, d, q) with the chosen specification and use it to make forecasts.

Step 1: Determine The Order Of Integration (d)

For illustrating the ARIMA model estimation, we will consider the time series variable “Consumption (Ct)” and use the ARIMA model to forecast consumption. The first step in ARIMA specification is determining the Order of Integration (d) of the consumption (Ct) variable. We can use the Augmented Dickey-Fuller (ADF) Test for this purpose.

Check Stationarity at Level

First, we apply the ADF test to check whether consumption is stationary at level (i.e., the original, undifferenced variable). We include a time trend in the ADF regression because it is significant. The results are:

| Test | Test statistic | Critical value (5%) | P-value |
|---|---|---|---|
| ADF test at level | -0.303 | -3.444 | 0.9895 |

Dependent variable: D(Ct)

| Variable | Coefficient | P-value |
|---|---|---|
| Ct-1 | -0.0007493 | 0.762 |
| trend | 0.3978951 | 0.000 |
| Lag 1 of D(Ct) | -0.0360474 | 0.663 |
| Lag 2 of D(Ct) | 0.1101883 | 0.182 |
| Lag 3 of D(Ct) | 0.1834063 | 0.029 |
| Constant | -3.410921 | 0.239 |

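The ADF test shows that consumption is non-stationary at level: the test statistic (-0.303) is well above the 5% critical value (-3.444) and the p-value is 0.9895, so we cannot reject the null hypothesis of a unit root. The test statistic is the t-ratio of the Ct-1 coefficient, but it is compared with Dickey-Fuller critical values rather than the usual t table. Rejecting the unit root would require this coefficient to be negative and significantly different from zero by that standard; here it is negative but close to zero and far from significant.

The trend is included in the ADF regression because its coefficient is significant (p-value 0.000). We also include three lagged differences (Lags 1, 2 and 3 of D(Ct)) in the ADF regression to remove autocorrelation.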
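For reference, the ADF regression at level summarized in the tables above can be written as follows. The Greek-letter coefficient names are our own notation, not part of the software output:

$$
\Delta C_t = \alpha + \beta t + \gamma C_{t-1} + \sum_{j=1}^{3} \delta_j \, \Delta C_{t-j} + \varepsilon_t,
\qquad H_0:\ \gamma = 0 \ \text{(unit root)}, \qquad H_1:\ \gamma < 0
$$

Here α is the constant (-3.410921), β the trend coefficient (0.3978951), γ the coefficient on C(t-1) (-0.0007493) and δ1, δ2, δ3 the coefficients on the three lagged differences. The reported test statistic (-0.303) is the estimate of γ divided by its standard error. The first-difference test has the same form, with ΔC in place of C and two lagged differences.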

Check Stationarity at First Difference

Since consumption is non-stationary at level, we apply the ADF test to its first difference. The results are:

| Test | Test statistic | Critical value (5%) | P-value |
|---|---|---|---|
| ADF test at first difference | -5.375 | -3.444 | 0.0000 |

Dependent variable: D(D(Ct))

| Variable | Coefficient | P-value |
|---|---|---|
| D(Ct-1) | -0.7435092 | 0.000 |
| trend | 0.3727328 | 0.000 |
| Lag 1 of D(D(Ct)) | -0.292645 | 0.014 |
| Lag 2 of D(D(Ct)) | -0.1825719 | 0.029 |
| Constant | -2.515396 | 0.268 |

From the table above, we conclude that the first difference of consumption is stationary: the test statistic (-5.375) is below the 5% critical value (-3.444), the p-value is 0.0000, and the coefficient on D(Ct-1) is negative and significant. The ADF regression includes a trend, because it is significant (the first difference is trend stationary), and two lagged differences to eliminate autocorrelation.

Therefore, consumption is integrated of order 1, I(1), so d = 1.
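The two ideas in this step, the difference operator D(·) and the rule for choosing d, can be sketched in plain Python. This is a minimal illustration: the helper names `difference` and `order_of_integration` are hypothetical, and the ADF statistics are typed in from the tables above, whereas in practice they would come from your statistical package.

```python
def difference(series, order=1):
    """Apply the difference operator `order` times: D(y)_t = y_t - y_{t-1}."""
    result = list(series)
    for _ in range(order):
        result = [curr - prev for prev, curr in zip(result, result[1:])]
    return result


def order_of_integration(adf_results):
    """Return the first differencing order at which the ADF test rejects a unit root.

    adf_results: (test_statistic, critical_value) pairs, one per differencing
    order starting at 0 (level). The ADF test is left-tailed, so it rejects
    when the statistic is more negative than the critical value.
    """
    for d, (stat, crit) in enumerate(adf_results):
        if stat < crit:
            return d
    return None  # no rejection at any order tried


# Reported 5% results from the tables above: level, then first difference.
reported = [(-0.303, -3.444), (-5.375, -3.444)]
print(order_of_integration(reported))    # 1
print(difference([100, 103, 107, 112]))  # [3, 4, 5]
```

Stopping at the first order that rejects mirrors the procedure above: test at level, and difference again only if the unit root cannot be rejected.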

Step 2: Determine The Order Of Autoregression (p) and Moving Average (q)

After deciding on the order of integration (d = 1), we must specify the order of autoregression (p) and the order of the moving average (q). To do this, we can use information criteria. Here, we compute Akaike's Information Criterion (AIC) and the Schwarz or Bayesian Information Criterion (SIC). You can learn more about AIC and SIC in the Information Criteria post.

We estimate several combinations of p and q with d = 1 and compute both criteria for each model, which lets us compare all the specifications directly. Lower values of AIC and SIC indicate a better balance between goodness of fit and the number of estimated parameters.

| Specification | AIC | SIC |
|---|---|---|
| ARIMA (1,1,0) | 1295.087 | 1304.139 |
| ARIMA (0,1,1) | 1351.886 | 1360.938 |
| ARIMA (1,1,1) | 1229.671 | 1241.74 |
| ARIMA (2,1,1) | 1230.335 | 1245.421 |
| ARIMA (1,1,2) | 1230.518 | 1245.604 |
| ARIMA (2,1,2) | 1232.3 | 1250.404 |
| ARIMA (1,1,3) | 1230.915 | 1249.018 |

ARIMA(1,1,1) has the lowest value of both AIC and SIC. Therefore, ARIMA(1,1,1) is a good starting point for estimating the ARIMA model.
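The selection rule can be sketched in plain Python using the textbook formulas AIC = -2 ln L + 2k and SIC = -2 ln L + k ln(n), where L is the maximized likelihood, k the number of estimated parameters and n the number of observations. This is an illustrative sketch: the function names are hypothetical, and the selection step simply picks the minimum of the values reported in the table above.

```python
import math


def aic(log_likelihood, k):
    """Akaike information criterion: -2 ln L + 2k, with k estimated parameters."""
    return -2.0 * log_likelihood + 2.0 * k


def sic(log_likelihood, k, n):
    """Schwarz (Bayesian) information criterion: -2 ln L + k ln(n)."""
    return -2.0 * log_likelihood + k * math.log(n)


# (AIC, SIC) pairs as reported in the table above.
reported = {
    "ARIMA(1,1,0)": (1295.087, 1304.139),
    "ARIMA(0,1,1)": (1351.886, 1360.938),
    "ARIMA(1,1,1)": (1229.671, 1241.74),
    "ARIMA(2,1,1)": (1230.335, 1245.421),
    "ARIMA(1,1,2)": (1230.518, 1245.604),
    "ARIMA(2,1,2)": (1232.3, 1250.404),
    "ARIMA(1,1,3)": (1230.915, 1249.018),
}

# Lower is better for both criteria.
best_by_aic = min(reported, key=lambda spec: reported[spec][0])
best_by_sic = min(reported, key=lambda spec: reported[spec][1])
print(best_by_aic, best_by_sic)  # ARIMA(1,1,1) ARIMA(1,1,1)
```

As a consistency check, assuming the same 151 observations as the final model below and k = 4 parameters for ARIMA(1,1,1) (constant, one AR term, one MA term and the error variance), SIC - AIC = k(ln n - 2) ≈ 12.07, which matches the reported gap of 1241.74 - 1229.671 = 12.069. This suggests, but does not prove, that the table uses these formulas; software packages differ in how they count k.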

Step 3: ARIMA Model Estimation And Diagnostic Checking

We estimate the ARIMA(1,1,1) model because it minimizes both information criteria. However, diagnostic checks show that the model suffers from autocorrelation: its residuals are not white noise. The Portmanteau white-noise test on the model's residuals gives the following results:

| Portmanteau white-noise test (lags = 15) | Value |
|---|---|
| Q statistic | 29.0503 |
| Prob > chi2(15) | 0.0158 |

The p-value (0.0158) is below 0.05, so we reject the null hypothesis that the residuals are white noise. Plots of the residual autocorrelations and partial autocorrelations show similar results. Hence, ARIMA (1,1,1) is not appropriate because its residuals are autocorrelated.
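A minimal plain-Python sketch of the portmanteau test follows. It assumes the Ljung-Box form of the statistic, Q = n(n + 2) Σ r_k² / (n - k) over lags k = 1..h, with degrees of freedom equal to the number of lags, which matches the chi2(15) reported above. The function names are hypothetical, and the chi-square tail probability is computed from a power series for the incomplete gamma function only to keep the example free of third-party libraries; statistical packages provide this test directly.

```python
import math


def autocorrelations(x, max_lag):
    """Sample autocorrelations r_1, ..., r_max_lag of the series x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    denom = sum(d * d for d in dev)  # full-sample sum of squared deviations
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
        for k in range(1, max_lag + 1)
    ]


def ljung_box_q(residuals, lags):
    """Ljung-Box portmanteau statistic: Q = n(n + 2) * sum_k r_k^2 / (n - k)."""
    n = len(residuals)
    r = autocorrelations(residuals, lags)
    return n * (n + 2) * sum(rk * rk / (n - k) for k, rk in enumerate(r, start=1))


def chi2_sf(q, df):
    """Upper-tail probability P(X > q) for X ~ chi-square(df).

    Computed as 1 - P(df/2, q/2), where P is the regularized lower incomplete
    gamma function evaluated by its power series (adequate for moderate q).
    """
    a, x = df / 2.0, q / 2.0
    if x <= 0.0:
        return 1.0
    term = total = 1.0 / a
    denom = a
    while term > 1e-15 * total:
        denom += 1.0
        term *= x / denom
        total += term
    lower = total * math.exp(-x + a * math.log(x) - math.lgamma(a))
    return 1.0 - lower


# Reported Q statistics (15 lags) from the two models in this article.
print(round(chi2_sf(29.0503, 15), 4))  # ARIMA(1,1,1): about 0.0158
print(round(chi2_sf(11.3465, 15), 4))  # final model: about 0.7277
```

In practice, `ljung_box_q` would be applied to the fitted model's residuals and the result passed to `chi2_sf` with the same number of lags.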

Important: A common remedy for residual autocorrelation is to include more autoregressive or moving average terms. Additionally, the 'trend' variable was significant in the ADF regressions, which suggests a deterministic trend in consumption. Therefore, we can also include a trend variable in the ARIMA model for better results.

Step 4: Final ARIMA Model Estimation And Forecasting

The final ARIMA model chosen for forecasting should not suffer from autocorrelation. Since the trend is significant for consumption, we also include a deterministic trend variable. Suppose that, after some trial and error, we choose a model with the 1st and 4th autoregressive lags, two moving average terms and a deterministic trend. Statistical software packages make such a model easy to estimate because they let us include only the lags we want and skip the unnecessary ones (here, AR lags 2 and 3). This flexibility is one of the strengths of ARIMA models. The estimated results are:

| Observations | Wald chi2 | P-value (Wald chi2) | Log-likelihood |
|---|---|---|---|
| 151 | 168.15 | 0.0000 | -596.8227 |

Dependent variable: D(Ct)

| Variable | Coefficient | OPG standard error | z | P-value |
|---|---|---|---|---|
| t (time trend) | 0.4936051 | 0.042396 | 11.64 | 0.000 |
| Constant | -4.14298 | 4.549389 | -0.91 | 0.362 |
| *ARMA terms* | | | | |
| AR(1) | 0.4505145 | 0.2668463 | 1.69 | 0.091 |
| AR(4) | -0.1400334 | 0.0634091 | -2.21 | 0.027 |
| MA(1) | -0.4774944 | 0.26613 | -1.82 | 0.069 |
| MA(2) | 0.1886453 | 0.0760914 | 2.48 | 0.013 |
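Written out with rounded coefficients, the estimated model is a regression of D(Ct) on a constant and a time trend with ARMA disturbances. The equations below assume the regression-with-ARMA-errors parameterization used by Stata's arima command (the OPG standard errors suggest Stata-style estimation); μ for the ARMA disturbance and ε for the white-noise error are our notation:

$$
\Delta C_t = -4.143 + 0.4936\,t + \mu_t
$$

$$
\mu_t = 0.4505\,\mu_{t-1} - 0.1400\,\mu_{t-4} + \varepsilon_t - 0.4775\,\varepsilon_{t-1} + 0.1886\,\varepsilon_{t-2}
$$

The AR coefficients at lags 2 and 3 are restricted to zero, which is how the model skips those lags.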

The coefficient on the 4th autoregressive lag is significant at the 5% level (p-value 0.027), as is the 2nd moving average term (p-value 0.013), while AR(1) and MA(1) are significant only at the 10% level. The trend variable 't' is highly significant, as expected. We expect this model to be free from autocorrelation. To confirm, we apply the Portmanteau test as before:

| Portmanteau white-noise test (lags = 15) | Value |
|---|---|
| Q statistic | 11.3465 |
| Prob > chi2(15) | 0.7277 |

The p-value (0.7277) is well above 0.05, so we cannot reject the null hypothesis that the residuals are white noise: the model does not appear to suffer from autocorrelation. Plots of the residual autocorrelations and partial autocorrelations show the same result.

Forecasting After ARIMA Model Estimation

Suppose we adopt the model above as the final ARIMA specification. We can then use it to make forecasts. Here, we produce dynamic forecasts for 52 quarters. In a dynamic forecast, each forecast uses the model's previous forecasts in place of lagged values; it does not use the sample values of consumption for those periods.

In this example, we use one-step-ahead (static) predictions for the first 100 quarters, where each forecast uses the actual sample values of consumption for the lagged terms. After quarter 100, we switch to dynamic forecasts, so the forecasts for quarters 101 to 152 build on previously forecasted values.
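The difference between static and dynamic forecasts can be illustrated with a deliberately simple, hypothetical AR(1) model, y(t) = const + phi * y(t-1) + e(t), rather than the trend-plus-ARMA model estimated above. The function name and the numbers are made up for illustration. Before the switch point, each forecast uses the actual lagged value; from the switch point onward, lagged values are replaced by the model's own earlier forecasts, so forecast errors can accumulate.

```python
def ar1_forecasts(y, const, phi, switch):
    """Forecasts from a hypothetical AR(1) model y_t = const + phi * y_{t-1} + e_t.

    While t - 1 < switch, the forecast of y_t uses the actual lagged value
    (one-step-ahead, or static, forecast). Once t - 1 >= switch, the lagged
    value is replaced by the model's own forecast (dynamic forecast).
    Index 0 has no forecast because there is no lagged value.
    """
    if switch < 1:
        raise ValueError("switch must be at least 1 so the first lag is observed")
    forecasts = [None]
    for t in range(1, len(y)):
        lag = y[t - 1] if t - 1 < switch else forecasts[t - 1]
        forecasts.append(const + phi * lag)
    return forecasts


y = [10.0, 12.0, 11.0, 13.0, 12.0]
print(ar1_forecasts(y, 5.0, 0.5, switch=len(y)))  # [None, 10.0, 11.0, 10.5, 11.5]
print(ar1_forecasts(y, 5.0, 0.5, switch=3))       # [None, 10.0, 11.0, 10.5, 10.25]
```

The two runs agree up to index 3; at index 4 the dynamic forecast builds on the earlier forecast (10.5) instead of the actual value (13.0).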

We can compute the forecast errors of both models to assess how well each one performs:

| Model | Overall forecast error, MAPE (%) | Forecast error in the dynamic period (quarters 101 to 152), MAPE (%) |
|---|---|---|
| ARIMA (1,1,1) | 1.49 | 2.98 |
| ARIMA with trend, AR(1,4) and MA(1,2) | 0.74 | 0.81 |

As we can see, the final model performs much better than ARIMA (1,1,1), most likely because it includes the lags and the trend variable whose omission caused autocorrelation in the earlier model.

The final model's forecast error remains low even for the dynamic forecasts: it rises only from 0.74% overall to 0.81% in the dynamic period. This is a good sign that the model is performing well. For ARIMA (1,1,1), by contrast, the error doubles (from 1.49% to 2.98%) when we switch to dynamic forecasts.

Mean Absolute Percentage Error (MAPE)

Because the aim of ARIMA models is usually forecasting, we can also use forecast accuracy to choose among ARIMA specifications: we simply pick the model that gives the smallest forecast error.

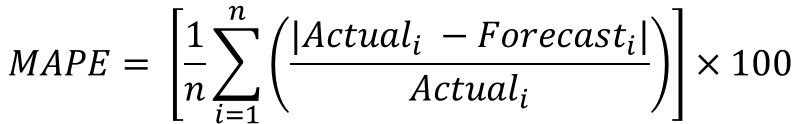

In our example, we use the Mean Absolute Percentage Error (MAPE) to measure forecast errors. It is computed as:

$$
\text{MAPE} = \frac{100}{n}\sum_{i=1}^{n}\left|\frac{\text{Actual}_i - \text{Forecast}_i}{\text{Actual}_i}\right|
$$

where n is the total number of observations, Actual_i is the sample consumption value for observation i and Forecast_i is the model's predicted consumption value for observation i.
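The formula translates directly into a few lines of Python. The sketch below uses made-up values, and `start` is a hypothetical index marking where dynamic forecasts would begin; slicing at that index gives the dynamic-period error, as in the two columns of the forecast-error table above.

```python
def mape(actual, forecast):
    """Mean Absolute Percentage Error, in percent.

    Assumes no actual value is zero, since each error is divided by it.
    """
    if not actual or len(actual) != len(forecast):
        raise ValueError("actual and forecast must be non-empty and of equal length")
    n = len(actual)
    return 100.0 / n * sum(abs((a - f) / a) for a, f in zip(actual, forecast))


# Hypothetical values; `start` marks where dynamic forecasts would begin.
actual = [100.0, 200.0, 400.0, 500.0]
forecast = [110.0, 190.0, 400.0, 520.0]
start = 2
print(mape(actual, forecast))                  # overall MAPE, about 4.75
print(mape(actual[start:], forecast[start:]))  # dynamic-period MAPE, about 2.0
```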

Variations Of The ARIMA Model: ARIMAX, Seasonality, SARIMA And SARIMAX

In the basic ARIMA, we estimate and make forecasts based only on the past behaviour of the time series variable. For instance, we included only the autoregressive terms and moving average terms in the ARIMA(1,1,1) above. This is the simplest form of the ARIMA model.

However, we can also introduce independent variables into the ARIMA model. For example, we could include 'income' as an independent variable to explain 'consumption'. Models that include additional independent variables alongside the autoregressive and moving average terms are called ARIMAX models. Our final model above is a simple example: it adds a 'trend' variable to account for the deterministic trend alongside the AR and MA terms.
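One common way to write an ARIMAX model, used for example by Stata's arima command, is as a regression with ARMA disturbances. In the sketch below, the vector x collects the independent variables (for instance, a constant, a time trend or income), and the notation is ours:

$$
\Delta C_t = \mathbf{x}_t' \boldsymbol{\beta} + \mu_t, \qquad
\mu_t = \sum_{i=1}^{p} \phi_i \, \mu_{t-i} + \varepsilon_t + \sum_{j=1}^{q} \theta_j \, \varepsilon_{t-j}
$$

The final model in Step 4 corresponds to x containing a constant and the trend t, with only φ1 and φ4 non-zero among the AR coefficients and q = 2.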

The consumption data used above were seasonally adjusted, meaning that seasonal variations had been removed, so we did not need to model any seasonal behaviour. However, seasonally adjusted data are not always available, or we might want to study the seasonal behaviour of our variables. In such cases, we can include seasonal terms in the ARIMA model; such models are known as SARIMA models. If the model also includes additional independent variables alongside the AR, MA and seasonal terms, it is known as a SARIMAX model.

We will discuss how to recognize seasonal behaviour and the specification of SARIMA models separately.
